DotLiDAR: High-fidelity, Rapid, Multi-device 6DOF Tracking Using Infrared LiDAR Image Sensing

Final Project - 15862 Computational Photography

I took Computational Photography, taught by Ioannis Gkioulekas, which in my opinion is one of the most fun classes at CMU. For the final project, I worked on DotLiDAR, which tracks the 6DOF pose of a moving device from an observer device using light projections, specifically by repurposing the light emitted by the LiDAR that iPhone AR apps already use! The project was selected as one of the winners, which earned me a Nikon D3500 DSLR as a prize, and I carry it around on my travels.

LiDAR emits light pulses and receives the bounced-back signals to measure the distance to obstacles. An infrared camera can capture this light when it lands on a wall, floor, desk, etc. If the projector (i.e., the LiDAR) moves, the projected pattern looks distorted from the camera's viewpoint. But here comes the interesting part: seen from the projector's own perspective, the projected pattern always stays the same. Our idea is to apply perspective-n-point (PnP) to a light projector by modeling the projector as a virtual camera. This gives us the relative 6DOF position and orientation of the projector from the camera's perspective.
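To make the virtual-camera idea concrete, here is a minimal NumPy sketch. It is not the DotLiDAR implementation: it swaps in a plain direct linear transform (DLT) for a production PnP solver, and it assumes the observer already knows the 3D position of each dot in its own frame and which pattern dot it corresponds to. All names (`simulate_dots`, `solve_projector_pose`, the 3x3 dot grid, the depths) are hypothetical and chosen only for illustration.

```python
import numpy as np

def rotation_from_axis_angle(axis, angle):
    """Rodrigues' formula: rotation matrix for a rotation of `angle` about `axis`."""
    ax, ay, az = np.asarray(axis, dtype=float) / np.linalg.norm(axis)
    K = np.array([[0.0, -az, ay], [az, 0.0, -ax], [-ay, ax, 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def simulate_dots(pattern_xy, depths, R_pc, t_pc):
    """Cast each pattern dot along its projector ray and return the 3D hit
    points expressed in the observer camera frame (assumed scene model)."""
    rays = np.column_stack([pattern_xy, np.ones(len(pattern_xy))])
    pts_projector = rays * depths[:, None]   # points in the projector frame
    return pts_projector @ R_pc.T + t_pc     # X_cam = R_pc @ X_proj + t_pc

def solve_projector_pose(dots_cam, pattern_xy):
    """DLT-based PnP with the projector as a virtual camera: the fixed pattern
    plays the role of the 2D image, the observed dots are the 3D points.
    Needs at least 6 non-coplanar dots. Returns the projector pose (R, t) in
    the camera frame."""
    rows = []
    zeros = np.zeros(4)
    for X, (u, v) in zip(dots_cam, pattern_xy):
        Xh = np.append(X, 1.0)
        rows.append(np.concatenate([Xh, zeros, -u * Xh]))
        rows.append(np.concatenate([zeros, Xh, -v * Xh]))
    _, _, Vt = np.linalg.svd(np.asarray(rows))
    P = Vt[-1].reshape(3, 4)                 # camera-to-projector [R | t], up to scale
    if np.linalg.det(P[:, :3]) < 0:          # resolve the sign ambiguity
        P = -P
    U, S, Vt_m = np.linalg.svd(P[:, :3])
    R_cp = U @ Vt_m                          # nearest rotation matrix
    t_cp = P[:, 3] / S.mean()                # undo the unknown scale
    return R_cp.T, -R_cp.T @ t_cp            # invert: projector pose in camera frame

# Fixed dot pattern in the projector's normalized image plane (3x3 grid).
gx, gy = np.meshgrid(np.linspace(-0.3, 0.3, 3), np.linspace(-0.3, 0.3, 3))
pattern_xy = np.column_stack([gx.ravel(), gy.ravel()])

# Hypothetical ground-truth projector pose and uneven scene depths.
R_true = rotation_from_axis_angle([0.2, 1.0, 0.1], 0.4)
t_true = np.array([0.5, -0.1, 0.3])
depths = np.random.default_rng(0).uniform(1.0, 3.0, len(pattern_xy))

dots_cam = simulate_dots(pattern_xy, depths, R_true, t_true)
R_est, t_est = solve_projector_pose(dots_cam, pattern_xy)
```

The key point is that `pattern_xy` never changes no matter how the projector moves, so it can serve as the "image" of a camera sitting at the projector. With noisy real measurements one would more likely reach for a robust solver such as OpenCV's `solvePnP`, and dots that all land on a single flat wall would need a planar (homography-based) variant, since this DLT needs non-coplanar points.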

The (significantly) extended version is published at CHI 2025, so check it out!

Photo zone 📸

Also check out some cute photos I have taken while carrying this camera around the world.

UISTers & Burghers (Oct 15, 2024)

Vatnajökull National Park (Jan 11, 2024)

Northern lights in Iceland (Jan 8, 2024)

Sólheimasandur (Jan 10, 2024)

Ice cave (Jan 11, 2024)

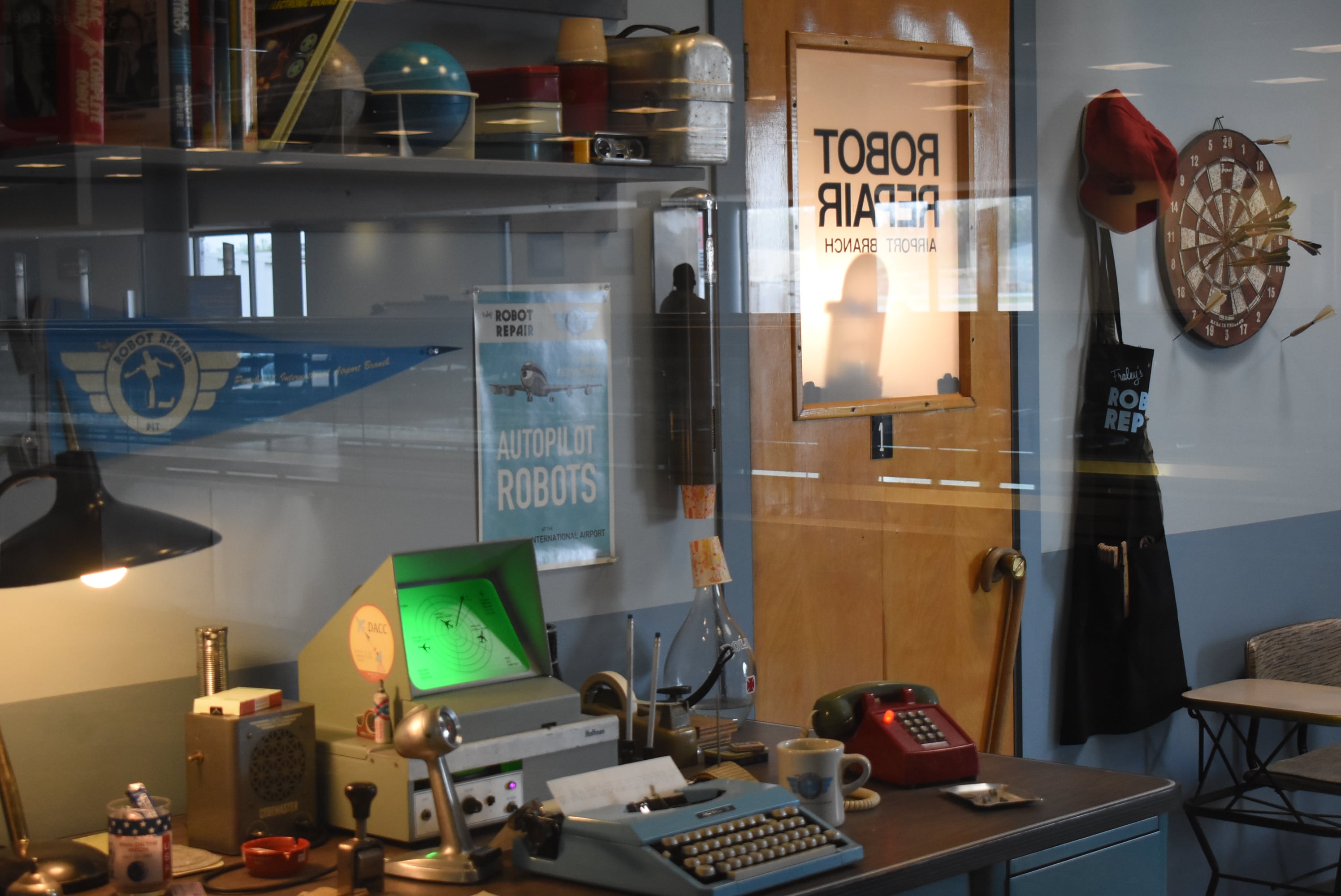

Robot repair shop at Pittsburgh airport (April 21, 2023)

Wizard of cat (Nov 9, 2024)

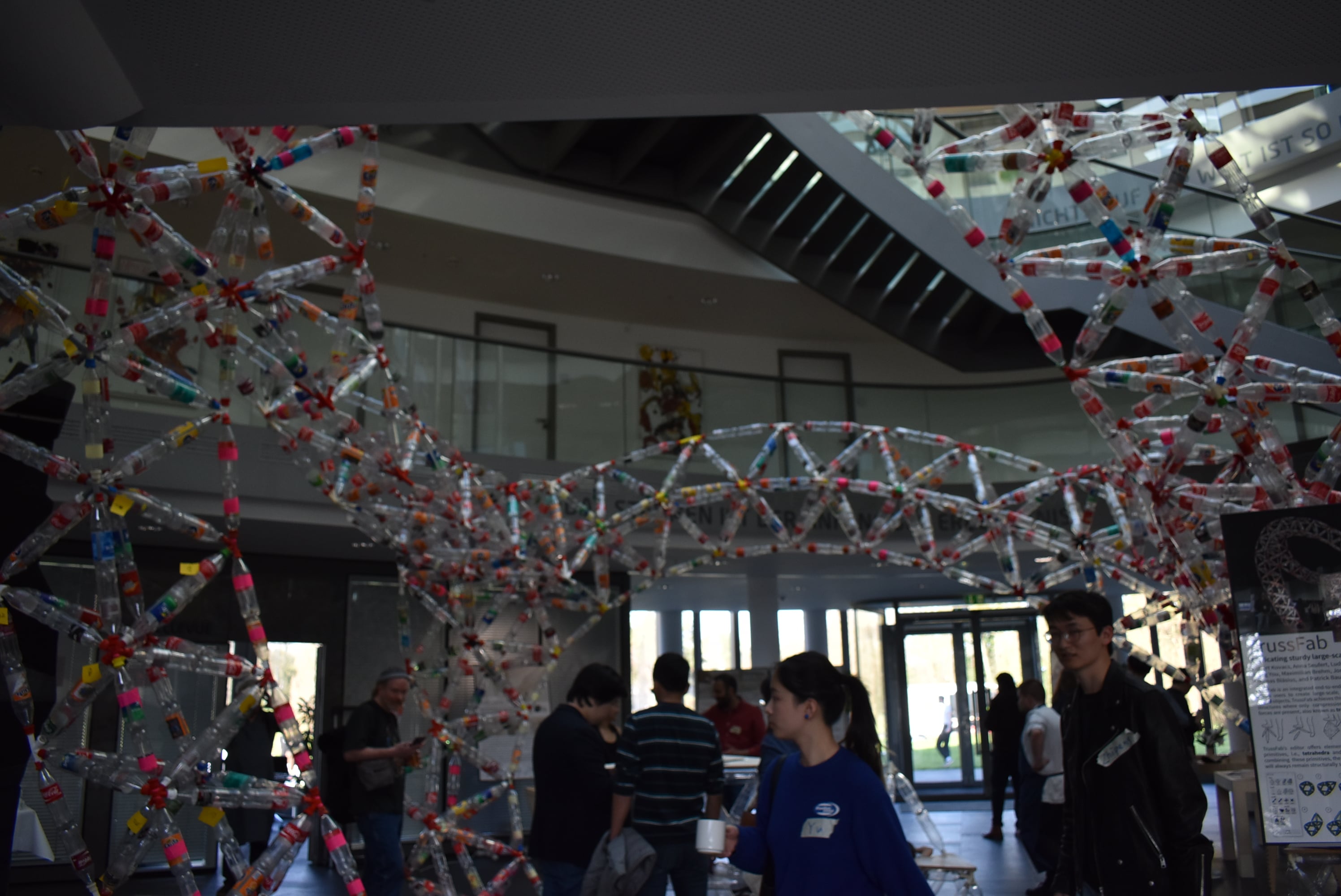

Dinosaur of Hasso Plattner Institute (April 22, 2023)

Leap (April 30, 2023)

Reynisfjara (Jan 12, 2024)

nice Nice (April 29, 2023)

Designed by Daehwa